A standard is a formally defined and documented technical specification. Content that complies with a standard can be interchanged successfully with any equipment or system that supports it. Historically, different genres, markets and geographies have adopted different standards to suit their primary aims.

Standards conversion is the umbrella term for converting content from one defined standard to another. This could mean converting some or all of the content attributes, such as the frame rate, format, dynamic range or colour space.
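To make the idea concrete, the short Python sketch below models a standard as a record of the attributes named above. It then lists which of those attributes a conversion would have to change. The `VideoStandard` class, its field names and the `conversion_steps` helper are hypothetical illustrations. They are not part of any InSync product or industry API.

```python
from dataclasses import dataclass, fields
from fractions import Fraction


@dataclass(frozen=True)
class VideoStandard:
    """Hypothetical record of the attributes a standards converter may change."""
    frame_rate: Fraction   # frames per second, kept exact, e.g. Fraction(30000, 1001)
    width: int             # pixels per line
    height: int            # lines per frame
    interlaced: bool       # True for field-based (interlaced) formats
    dynamic_range: str     # e.g. "SDR", "PQ", "HLG"
    colour_space: str      # e.g. "BT.709", "BT.2020"


def conversion_steps(src: VideoStandard, dst: VideoStandard) -> list[str]:
    """Return the names of the attributes that differ between two standards."""
    return [f.name for f in fields(VideoStandard)
            if getattr(src, f.name) != getattr(dst, f.name)]


hd_1080i25 = VideoStandard(Fraction(25), 1920, 1080, True, "SDR", "BT.709")
uhd_2160p5994 = VideoStandard(Fraction(60000, 1001), 3840, 2160, False, "HLG", "BT.2020")

# Every attribute differs here, so every kind of conversion is needed.
print(conversion_steps(hd_1080i25, uhd_2160p5994))
```

Two 1080i25 variants that differ only in colour space would return just `['colour_space']`.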

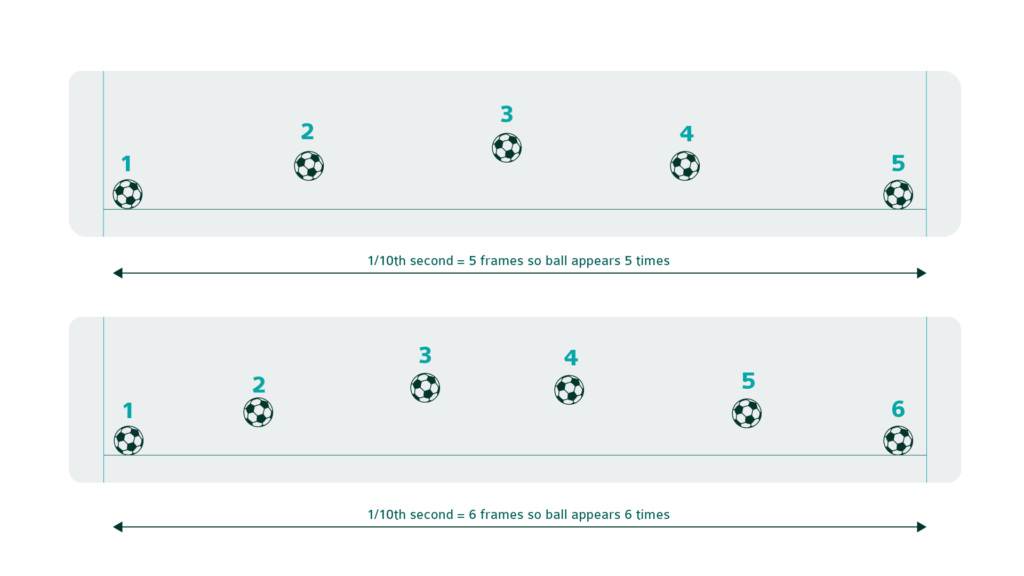

When we watch a movie, a television broadcast or a video stream on a mobile device, we are presented with a series of pictures (or frames) in quick and regular succession. We commonly describe the rate of presentation in frames per second (fps). You may also hear frame rates specified in Hertz (Hz), but strictly this is only accurate for progressive formats.

Different standards have been introduced over time, but content production, post-production and distribution teams usually only need to worry about a handful of frame rates: traditionally 23.98, 24, 25, 29.97, 50, 59.94 and 60 Hz. If we could acquire, produce and deliver at one single frame rate, this would all be quite straightforward, but this is rarely the case.
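The 23.98, 29.97 and 59.94 labels are rounded. The underlying rates are exact ratios: the corresponding integer rate multiplied by 1000/1001. A small Python sketch using `fractions.Fraction` keeps them exact and shows how quickly the two families drift apart. The `RATES` table is simply an illustration of the values listed above.

```python
from fractions import Fraction

# The "fractional" rates are the integer rates multiplied by 1000/1001.
RATES = {
    "23.98": Fraction(24000, 1001),
    "24": Fraction(24),
    "25": Fraction(25),
    "29.97": Fraction(30000, 1001),
    "50": Fraction(50),
    "59.94": Fraction(60000, 1001),
    "60": Fraction(60),
}

for label, rate in RATES.items():
    print(f"{label:>6} fps = {rate} = {float(rate):.6f}")

# Difference in frame count between 24 and 23.98 fps over one hour of running time
drift = 3600 * (RATES["24"] - RATES["23.98"])
print(f"24 vs 23.98 fps over one hour: {float(drift):.2f} frames")
```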

Within a project there can be many sources at different frame rates which need to be combined. Even when these are normalised to a master frame rate, the final programme may still require multiple versions at different frame rates for different target audiences. The most obvious examples are live events and breaking news, where the originating content needs standards conversion for international broadcast.

Motion compensation is one technique for frame rate conversion and is generally accepted as the best approach for producing smooth, continuous, high-quality output. It works by analysing motion within the input sequence of frames and estimating where objects would appear in the newly created frames.
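The sketch below illustrates the timing side of the problem. For each output frame, it works out which pair of input frames that frame falls between and at what fractional phase. It then places a moving object at that phase along its motion vector.

The sketch assumes constant-velocity motion between two input frames. `output_phases` and `mc_position` are hypothetical helper names. The hard part of a real converter, estimating reliable motion vectors, is deliberately left out.

```python
import math
from fractions import Fraction


def output_phases(in_fps, out_fps, n_out):
    """For output frame n, return (i, p): the frame lies a fraction p (0 <= p < 1)
    of the way from input frame i to input frame i + 1."""
    step = Fraction(in_fps) / Fraction(out_fps)  # input periods per output period
    phases = []
    for n in range(n_out):
        t = n * step            # time of output frame n, in input-frame units
        i = math.floor(t)
        phases.append((i, t - i))
    return phases


def mc_position(position, vector, phase):
    """Position of an object at the given phase between two input frames,
    assuming it moves linearly by `vector` (pixels per input frame)."""
    return tuple(x + phase * v for x, v in zip(position, vector))


# 25 fps to 30 fps: the pattern of phases repeats every five input frames.
for i, p in output_phases(25, 30, 6):
    print(f"between input {i} and {i + 1}, phase {p}")

# An object at (100, 40) moving 12 px right and 6 px down per input frame,
# placed half way between two input frames:
print(mc_position((100, 40), (12, 6), Fraction(1, 2)))
```

Exact fractions make the repeating phase pattern visible. Passing `Fraction(30000, 1001)` as the output rate works in the same way for 29.97 fps.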

A typical motion compensated system may use phase correlation as an initial analysis stage. The results can then be further processed to provide information about the distance and direction of movement of objects in the scene. Assigning motion vectors to image objects is a highly specialised area, and the quality of the results depends on the algorithms applied.
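The sketch below shows the core of phase correlation using NumPy's FFT functions. The normalised cross-power spectrum of two frames is inverse-transformed, and the position of its peak gives the displacement.

This is a simplified, hypothetical example that assumes a single global, whole-pixel, circular shift. Practical systems typically correlate blocks, refine the result to sub-pixel accuracy, and treat several peaks as candidate vectors before assigning them to objects.

```python
import numpy as np


def phase_correlate(a, b):
    """Estimate the integer shift (dy, dx) such that b ≈ np.roll(a, (dy, dx), axis=(0, 1)).

    Also returns the correlation surface, whose strongest peaks would serve as
    candidate motion vectors in a fuller system."""
    fa = np.fft.fft2(a)
    fb = np.fft.fft2(b)
    cross = fb * np.conj(fa)
    cross /= np.abs(cross) + 1e-12        # keep only the phase, discard magnitude
    surface = np.real(np.fft.ifft2(cross))
    peak = np.unravel_index(np.argmax(surface), surface.shape)
    # Peak positions past the half-way point represent negative (wrapped) shifts.
    shift = tuple(int(p) if p <= n // 2 else int(p) - n
                  for p, n in zip(peak, surface.shape))
    return shift, surface


rng = np.random.default_rng(0)
frame_a = rng.random((64, 64))
frame_b = np.roll(frame_a, (5, -3), axis=(0, 1))   # content moved 5 down, 3 left
shift, _ = phase_correlate(frame_a, frame_b)
print(shift)
```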

InSync Technology are at the forefront of motion compensated frame rate conversion, hold many patents in this area and have developed numerous hardware and software solutions based on this technology.

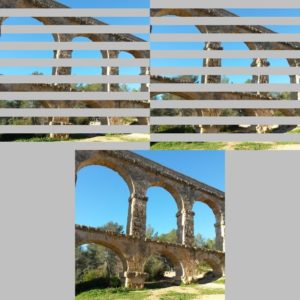

Interlaced video is where an image frame has been acquired in two passes, known as fields. One field contains the odd lines of the scene, and the second field contains the intermediate (or even) lines. Progressive video formats scan every line on every image. Deinterlacing is the conversion of content from an interlaced to progressive video format.

The deinterlacer is usually the first step in any format and frame rate conversion processing, so it is absolutely critical to retain the highest possible quality at this stage. Any defects introduced at this stage will be compounded by onward processing, thereby damaging your precious asset.

It is essential to remember that the two fields that you are using to make one progressive frame are separated in time, so simply combining them can lead to very unpleasant artefacts. Inferior deinterlacing methods can lead to jagged edges, ghosting and picture blur.

A good deinterlacer will apply different processing in moving and stationary areas in order to obtain the maximum possible output resolution.
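To illustrate the difference between moving and stationary areas, here is a minimal motion-adaptive deinterlacer in Python with NumPy. It weaves the two fields together where the picture is static. Where motion is detected, it interpolates vertically within a single field instead.

Rows are counted from zero, so the odd lines of the scene (1, 3, 5 and so on) are array rows 0, 2, 4. The per-pixel motion detector, the `threshold` value and the function names are assumptions made for illustration. The detector works by comparing the bottom field with the previous frame's bottom field. Real deinterlacers use far more sophisticated motion detection and edge-directed interpolation.

```python
import numpy as np


def split_fields(frame):
    """Top field: rows 0, 2, 4, ... (lines 1, 3, 5 counting from 1); bottom field: the rest."""
    return frame[0::2], frame[1::2]


def motion_adaptive_deinterlace(top, bottom, prev_bottom, threshold=10.0):
    """Build a progressive frame from a top field plus the bottom-field lines.

    Static pixels: weave (use the bottom-field line as captured).
    Moving pixels: average the top-field lines above and below instead, because
    the bottom field was captured at a different instant.
    Assumes an even frame height, so both fields have the same number of lines."""
    top = top.astype(np.float64)
    bottom = bottom.astype(np.float64)
    below = np.vstack([top[1:], top[-1:]])      # top-field line below each gap (edge repeated)
    interpolated = (top + below) / 2.0
    # Same-parity fields one frame apart are spatially aligned, so a large
    # difference indicates motion rather than vertical detail.
    moving = np.abs(bottom - prev_bottom) > threshold
    out = np.empty((2 * top.shape[0],) + top.shape[1:])
    out[0::2] = top
    out[1::2] = np.where(moving, interpolated, bottom)
    return out


rng = np.random.default_rng(1)
frame = rng.random((6, 8)) * 255
top, bottom = split_fields(frame)
static = motion_adaptive_deinterlace(top, bottom, prev_bottom=bottom)
moving = motion_adaptive_deinterlace(top, bottom, prev_bottom=bottom + 100)
```

In a static scene the output is identical to the original frame. When motion is flagged everywhere, every bottom-field line is rebuilt from the top field, which trades vertical resolution for freedom from combing.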

Typically, movie content is produced at 23.98 or 24 fps, and content for TV or streaming distribution may be 25, 29.97, 50, 59.94 or 60 fps. With the advent of improved camera technology and increases in available bandwidth for storage and transmission, producers are experimenting with higher frame rates such as 100, 119.88 and 120 fps. Research has shown that motion profiles are smoother at higher frame rates. Such productions also lend themselves more readily to conversion applications, since frames at lower rates can be interpolated more easily from a denser sequence of source frames.

When discussing aspect ratio, Display Aspect Ratio (DAR) is most commonly implied. DAR is the ratio of the width to the height of the display, for example 4:3 (used in original SD productions) and 16:9 (now adopted for HD and UHD programming). When film or video material is recorded as a file, it may be stored at a different ratio, known as the Storage Aspect Ratio (SAR): the ratio of the stored frame's width to its height in pixels. For example, video stored at 1280×720 pixels has an SAR of 16:9. Many HD storage formats, such as DNxHD, AVC HD and ProRes 422, store HD video at a 16:9 frame size, so that SAR = DAR (“square pixels”).

However, there are a number of frame sizes for which the SAR is not the same as the DAR. These are known as anamorphic frame sizes (with “non-square” pixels). Examples include 1440×1080 and 1280×1080. In these cases, a third type of aspect ratio, Pixel Aspect Ratio (PAR), is defined. The relationship between the three ratios is DAR = PAR × SAR.
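The relationship DAR = PAR × SAR can be checked with exact fractions. In this hypothetical sketch, SAR comes from the stored frame size. PAR is then derived by dividing the intended 16:9 DAR by the SAR. The result confirms square pixels for 1280×720 and 1920×1080, and non-square pixels for the anamorphic 1440×1080 and 1280×1080 sizes.

```python
from fractions import Fraction


def storage_aspect_ratio(width, height):
    """SAR: stored pixel columns over rows (Fraction reduces it automatically)."""
    return Fraction(width, height)


def pixel_aspect_ratio(width, height, dar):
    """PAR from DAR = PAR × SAR, i.e. PAR = DAR / SAR."""
    return Fraction(dar) / storage_aspect_ratio(width, height)


WIDESCREEN = Fraction(16, 9)   # intended display aspect ratio
for w, h in [(1280, 720), (1920, 1080), (1440, 1080), (1280, 1080)]:
    sar = storage_aspect_ratio(w, h)
    par = pixel_aspect_ratio(w, h, WIDESCREEN)
    kind = "square" if par == 1 else "non-square"
    print(f"{w}x{h}: SAR {sar}, PAR {par} ({kind} pixels)")
```

For example, 1440×1080 has an SAR of 4:3, so each pixel must be displayed 4/3 times as wide as it is tall to fill a 16:9 screen.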

In many standards conversion applications, we need to modify the aspect ratio of the picture. The problem of converting SD 4:3 material to HD 16:9 remains for stored assets, and complex aspect ratio conversions continue to be required when repurposing movie material for TV and mobile distribution.

Movies intended for cinematic distribution may be shot at 1.85:1 or 2.35:1, which requires aspect ratio conversion for viewers who typically use 16:9 display screens. Preserving the entire width of the production may require “letterbox” presentation, with black bands above and below the image. Avoiding the black bands means cropping the content horizontally and then scaling the picture to occupy the full display height and width.

Some audiences may find letterboxing distracting, especially on small screens such as those on aircraft seat backs or mobile phones, so centre-cutting followed by zoom may seem quite appealing. However, the director’s artistic intent may be lost, especially if important action takes place away from the centre of the screen. Manual or automated pan and scan can help, as the editor can choose the most important area of each scene, but some of the content will still inevitably be lost.
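The geometry behind letterboxing, centre-cutting and pan and scan is simple arithmetic. The sketch below uses exact fractions, hypothetical helper names, and a source assumed to be wider than the display. It computes three things:

- the picture height and black-bar heights for a letterbox;
- the fraction of the source width that survives a centre cut;
- the columns kept by a crop window, which can be centred or panned.

```python
from fractions import Fraction


def letterbox(src_ar, disp_w, disp_h):
    """Fit the full source width on the display (source wider than display).
    Returns (picture height, top bar, bottom bar) in lines."""
    pic_h = round(disp_w / Fraction(src_ar))
    spare = disp_h - pic_h
    return pic_h, spare // 2, spare - spare // 2


def centre_cut_fraction(src_ar, disp_ar):
    """Fraction of the source width that remains when the picture is scaled to
    the full display height and the sides are cropped."""
    return Fraction(disp_ar) / Fraction(src_ar)


def crop_window(src_width, keep, centre=None):
    """Columns [left, right) kept. centre=None gives a centre cut; any other
    centre pans the window (pan and scan), clamped to the picture edges."""
    crop_w = round(src_width * keep)
    if centre is None:
        centre = src_width / 2
    left = min(max(round(centre - crop_w / 2), 0), src_width - crop_w)
    return left, left + crop_w


print(letterbox(Fraction("2.35"), 1920, 1080))
print(float(centre_cut_fraction(Fraction("2.35"), Fraction(16, 9))))
print(crop_window(1000, Fraction(3, 4), centre=900))
```

With a 2.35:1 source on a 16:9 display, a centre cut keeps only about three quarters of the width. Pan and scan moves the same window rather than enlarging it.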

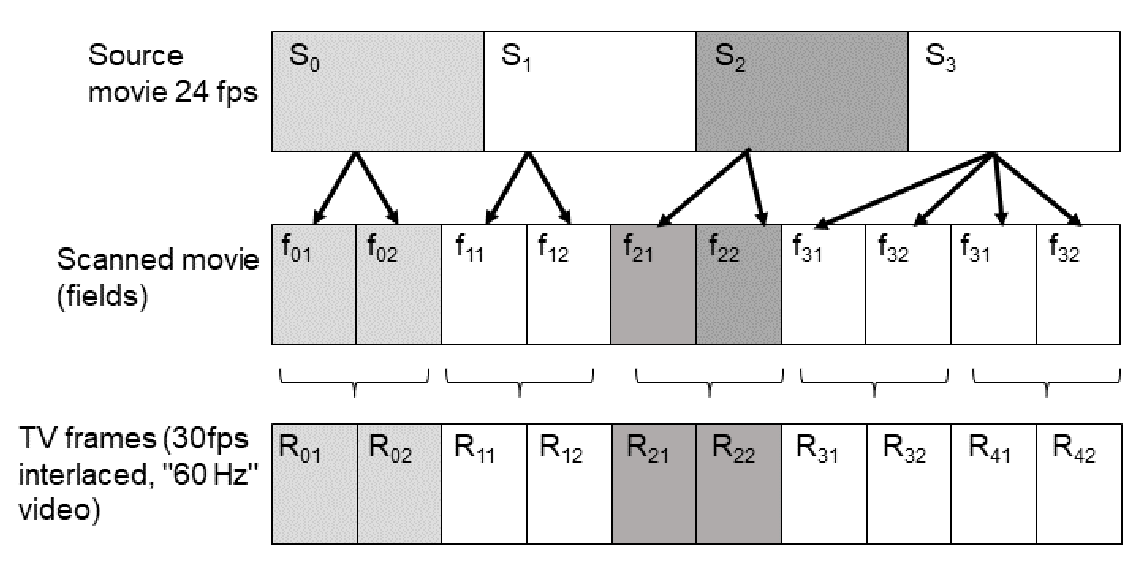

In addition to frame rate, video and movie content is often described by a cadence. In basic terms, this describes how many times each field or frame is repeated in a sequence of fields or frames. Frame repetition has been used since the earliest days of cinema, where the shutter was opened and closed repeatedly on each frame to reduce flicker. When movies began to be transferred for TV viewing in the US, the film had to be converted from 24 fps (slowed slightly to 23.976 fps) to 59.94 fields per second. This was solved using a scanning pattern of 2 fields then 3 fields, which is termed “2:3 cadence”.

Interlaced video, being a sequence of odd then even fields (see the description of deinterlacing above), is termed 1:1. This denotes that the image is refreshed every field, i.e. there are no repeats. Many other cadences are in use, such as 2:2 (often used to describe progressive segmented frames) and 5:4 (used in animations).
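A cadence can be treated as a repeat pattern applied frame by frame. The sketch below uses a hypothetical `apply_cadence` helper and records only which source frame each output field comes from. It ignores field parity. In real 2:3 pulldown, the fields for each film frame are its own top and bottom fields, with one of them shown a second time on every other frame.

```python
from itertools import cycle


def apply_cadence(frames, pattern):
    """Expand source frames into a field sequence using a repeat pattern,
    e.g. (2, 3) for 2:3 cadence or (2, 2) for 2:2."""
    sequence = []
    for frame, repeats in zip(frames, cycle(pattern)):
        sequence.extend([frame] * repeats)
    return sequence


print("".join(apply_cadence("ABCD", (2, 3))))   # four film frames -> ten fields
print("".join(apply_cadence("ABCD", (2, 2))))
print(len(apply_cadence(range(24), (2, 3))))    # one second of 24 fps film
```

Four film frames become ten fields, so 24 frames become 60 fields. Slowing the film to 23.976 fps then gives the 59.94 fields per second of US television.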

Dynamic range in production refers to the available range of brightness from black to peak white. High Dynamic Range (HDR) provides a greater contrast ratio between black and white than Standard Dynamic Range (SDR), so that fine variations in blacks can be accommodated at the same time as very bright whites.

HDR can be used to capture effects with a wide range of luminance detail, for example, shining sparkles in dark water, where SDR would either crush all the detail of the water or would lose the intensity of the contrast between the sparkles and the water.

Multiple HDR variants are supported by the standards, the best known being Perceptual Quantisation (PQ) and Hybrid Log Gamma (HLG), both defined in ITU-R BT.2100. In an end-to-end system from camera to display, preserving the director’s artistic intent relies on each component of the video workflow being aware of the content’s dynamic range and processing it appropriately. For example, if the source content is SDR but is incorrectly treated as HDR within the workflow, the resulting video will suffer unpleasant artefacts that make it look over-enhanced.
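For reference, the PQ and HLG transfer curves mentioned above can be written directly from their published constants (SMPTE ST 2084 for PQ, and ITU-R BT.2100 for both). This Python sketch covers only the signal curves. PQ maps absolute luminance, up to 10,000 cd/m², to a 0 to 1 signal, while HLG maps relative scene light. A real PQ-to-HLG or SDR-to-HDR conversion also involves the OOTF, a reference peak luminance and colour handling, all of which are omitted here.

```python
import math

# PQ constants (SMPTE ST 2084, also used in ITU-R BT.2100)
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32


def pq_inverse_eotf(nits):
    """Absolute luminance in cd/m² (0 to 10000) -> PQ signal value (0 to 1)."""
    y = (nits / 10000) ** M1
    return ((C1 + C2 * y) / (1 + C3 * y)) ** M2


def pq_eotf(signal):
    """PQ signal value (0 to 1) -> absolute luminance in cd/m²."""
    e = signal ** (1 / M2)
    return 10000 * (max(e - C1, 0) / (C2 - C3 * e)) ** (1 / M1)


# HLG OETF constants (ITU-R BT.2100)
A = 0.17883277
B = 1 - 4 * A
C = 0.5 - A * math.log(4 * A)


def hlg_oetf(e):
    """Normalised scene-linear light (0 to 1) -> HLG signal value (0 to 1).
    Square-root segment for dark values, logarithmic segment for highlights."""
    return math.sqrt(3 * e) if e <= 1 / 12 else A * math.log(12 * e - B) + C


for nits in (0.1, 100, 1000, 10000):
    print(f"{nits:>7} cd/m² -> PQ signal {pq_inverse_eotf(nits):.4f}")
print(f"HLG signal at scene light 0.5: {hlg_oetf(0.5):.4f}")
```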

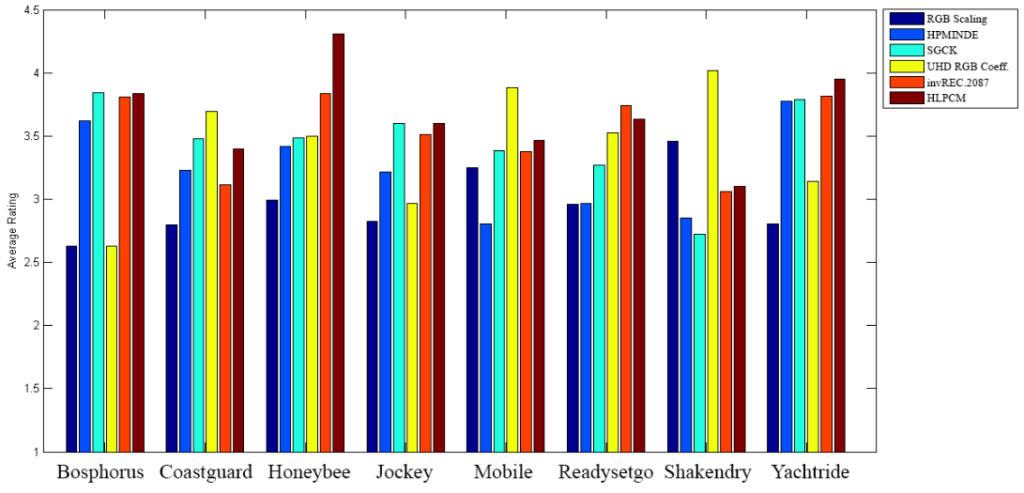

During standards conversion of UHD content, it may be necessary to map between different HDR standards, such as PQ to HLG or vice versa. Up- and down-conversion between HD and UHD typically also requires mapping between SDR and HDR.

Some cameras provide simultaneous SDR and HDR outputs. Even so, mapping the SDR output to HDR using one of the standardised curves may not produce the same look as the HDR output captured at the same time, if gain was applied to the SDR version at the camera head. The same problem can affect an HDR to SDR conversion.

The processing to be applied depends on the intended use of the content. SDR content may be integrated into an HDR programme which is then distributed in both SDR and HDR. In that case, a “round trip” approach should be taken: the SDR content is mapped into the HDR space without altering its appearance, so that it returns unchanged when the programme is converted back to SDR. If the programme will remain in HDR, the SDR content may instead be contrast-enhanced by expanding its luminance range.

When HDR content is mapped to SDR, the process is not reversible. The additional contrast ratio available in HDR has to be reduced to fit the SDR space, losing the fine granularity of the brightness levels. HDR to SDR mapping may include soft clipping, in which the brightest levels are compressed gradually into the smaller dynamic range rather than being cut off abruptly.
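To show why soft clipping helps, the sketch below compares it with a hard clip. The inputs are relative luminance values, where 1.0 stands for SDR peak white. Values below a knee pass through unchanged. Above the knee, an exponential shoulder compresses the highlights. The shoulder starts with a slope of 1 at the knee, so there is no visible kink, and distinct bright levels stay distinct instead of all becoming 1.0. The knee of 0.8 and the curve shape are arbitrary illustrative choices, not the curve used by any particular standard or product.

```python
import numpy as np


def soft_clip(x, knee=0.8):
    """Map relative luminance x >= 0 (1.0 = SDR peak white) towards [0, 1].

    Below the knee (which must be < 1) values are unchanged. Above it, an
    exponential shoulder with slope 1 at the knee approaches 1.0 asymptotically."""
    x = np.asarray(x, dtype=np.float64)
    headroom = 1.0 - knee
    shoulder = knee + headroom * (1.0 - np.exp(-(x - knee) / headroom))
    return np.where(x <= knee, x, shoulder)


hdr_levels = np.array([0.5, 0.9, 1.5, 2.0, 3.0])
print("hard clip:", np.minimum(hdr_levels, 1.0))   # 1.5, 2.0 and 3.0 all become 1.0
print("soft clip:", np.round(soft_clip(hdr_levels), 4))
```

Either way the mapping loses information, which is why HDR to SDR conversion cannot be undone. The soft version simply keeps the highlight gradations visible instead of flattening them.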

Colour gamut refers to the range of colours which can be managed within a content processing chain. It is limited by prevailing technology, for example camera sensors or display characteristics. Wide Colour Gamut (WCG), defined in BT.2020, extends the HD colour gamut (BT.709) to include the much wider range of colours that current technology enables.

Using WCG with HDR offers content producers a massive array of artistic options to support their storytelling. As long as the end viewer has a suitably enabled display, pictures can now move closer to realism, with a richness and depth not previously available outside the cinema. However, such benefits are lost if the content is badly converted. For example, if the content was produced with WCG but an intervening standards converter treated the video as BT.709, viewing on a BT.2020-compliant monitor will be disappointing, as the colours will appear desaturated.
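The relationship between the two gamuts can be made concrete by deriving the linear-light BT.709 to BT.2020 conversion matrix. It is built from the primaries and D65 white point of each colour space. This Python/NumPy sketch is an illustration only. The matrix must be applied to linear RGB, after any transfer function has been removed, and the result should agree closely with the matrix published in ITU-R BT.2087.

Look at the last printed line. A fully saturated BT.709 red becomes a mix of all three BT.2020 primaries. Suppose a converter wrongly assumes BT.709 input and applies this mapping to material that is already BT.2020. Every colour is then pulled inwards, which is one way the desaturation described above can arise.

```python
import numpy as np

# CIE xy chromaticities of the R, G, B primaries, and the D65 white point
BT709 = [(0.640, 0.330), (0.300, 0.600), (0.150, 0.060)]
BT2020 = [(0.708, 0.292), (0.170, 0.797), (0.131, 0.046)]
D65 = (0.3127, 0.3290)


def xyz(x, y):
    """CIE XYZ of a chromaticity, normalised so that Y = 1."""
    return np.array([x / y, 1.0, (1.0 - x - y) / y])


def rgb_to_xyz(primaries, white):
    """Matrix taking linear RGB to XYZ, scaled so that RGB (1, 1, 1) maps to the white point."""
    p = np.column_stack([xyz(*c) for c in primaries])
    s = np.linalg.solve(p, xyz(*white))
    return p * s   # broadcasting scales column j by s[j]


M_709_TO_2020 = np.linalg.inv(rgb_to_xyz(BT2020, D65)) @ rgb_to_xyz(BT709, D65)

print(np.round(M_709_TO_2020, 4))
print(M_709_TO_2020 @ np.array([1.0, 0.0, 0.0]))   # saturated BT.709 red in BT.2020 terms
```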

Hardware-based conversion products are also widely available, in modular or appliance form. Many of these have been designed and manufactured by InSync Technology on an OEM basis.

A CPU-only approach means that the software can run on standard IT servers without the need for supplementary hardware. Requiring only CPU resources is a much more accessible, flexible and cost-effective approach when software services need to scale on demand, particularly in cloud-based environments.

Since our inception in 2003, InSync has specialised in the development of highly efficient signal processing hardware and software products, with a focus on motion compensated frame rate and format conversion (standards converters). We are recognised as industry leaders in this field, and as media and broadcast evolve, InSync continues to invest and innovate to bring our customers the solutions they need.